Biography

I am a Postdoctoral Fellow at King Abdullah University of Science and Technology (KAUST), Saudi Arabia, specializing in Generative AI. I obtained my Ph.D. in Computer Vision and Deep Learning from Linköping University, Sweden, under the supervision of Prof. Michael Felsberg and Prof. Fahad Shahbaz Khan.

I have 11 years of interdisciplinary experience in academic and industrial roles across Sweden, Egypt, and Saudi Arabia.

Areas of Expertise

- Diffusion Models
- Vision Foundation Models
- Large Language Models (LLMs)
- Retrieval-Augmented Generation (RAG)
- Multi-Agent Systems
- Image/Video Object Detection, Segmentation, and Tracking
- Depth Estimation and Completion
- Uncertainty in Deep Learning
- Optical Flow Estimation
- AI for Sports

Updates

Paper Accepted at NeurIPS 2025 as a Spotlight

"Mind-the-Glitch" has been accepted at NeurIPS 2025 as a Spotlight (top 3% of submissions).

June 2025

Paper Accepted at ICCV 2025

"EditCLIP: Representation Learning for Image Editing" was accepted at ICCV 2025.

June 2025

Paper Accepted at SIGGRAPH 2025

"PartEdit: Fine-Grained Image Editing using Pre-Trained Diffusion Models" will be presented at SIGGRAPH 2025 in Vancouver, Canada.

March 2025

New Preprint Released

"EditCLIP: Representation Learning for Image Editing" was released on arXiv.

February 2025

Paper Accepted at ICLR 2025

"Build-A-Scene: Interactive 3D Layout Control for Diffusion-Based Image Generation" will be presented at ICLR 2025 in Singapore.

January 2025

Paper Accepted at CVPR 2025

"Zero-Shot Video Semantic Segmentation based on Pre-Trained Diffusion Models" will be presented at CVPR 2025 in Nashville, USA.

January 2025

Selected Publications

Mind-the-Glitch: Visual Correspondence for Detecting Inconsistencies in Subject-Driven Image Generation

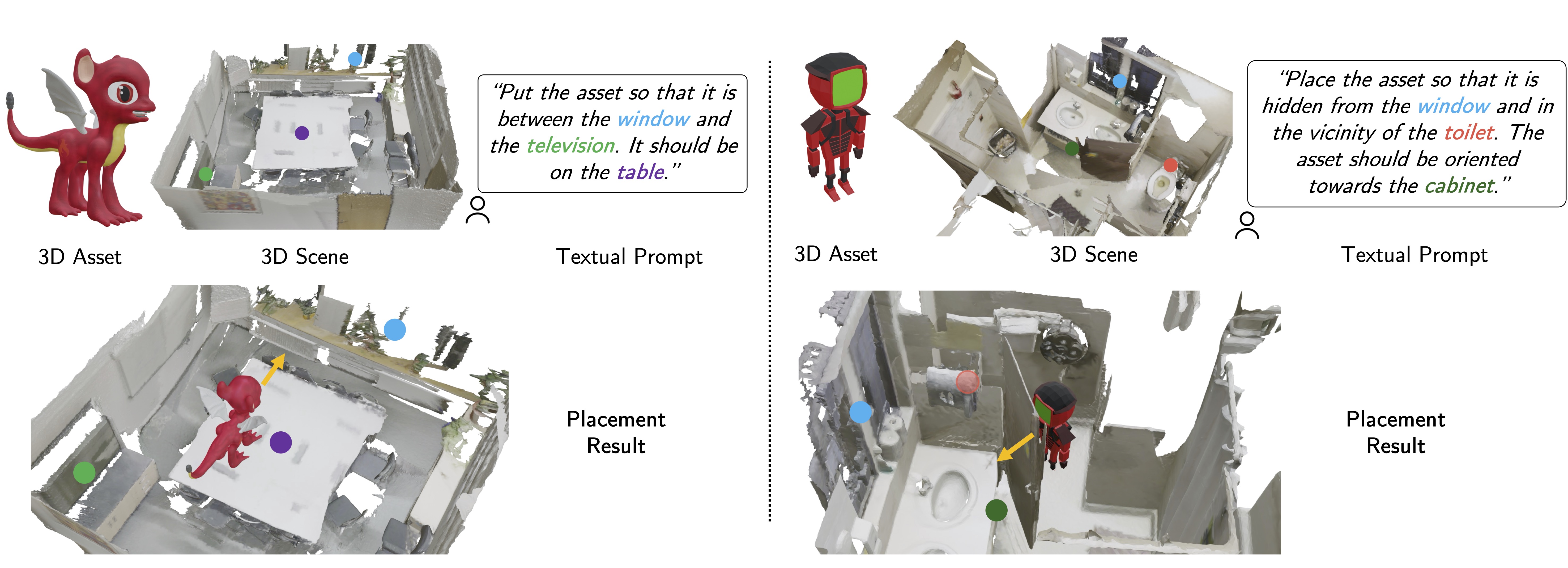

ZeroKey: Point-Level Reasoning and Zero-Shot 3D Keypoint Detection from Large Language Models

Build-A-Scene: Interactive 3D Layout Control for Diffusion-Based Image Generation

PartEdit: Fine-Grained Image Editing using Pre-Trained Diffusion Models

Zero-Shot Video Semantic Segmentation based on Pre-Trained Diffusion Models

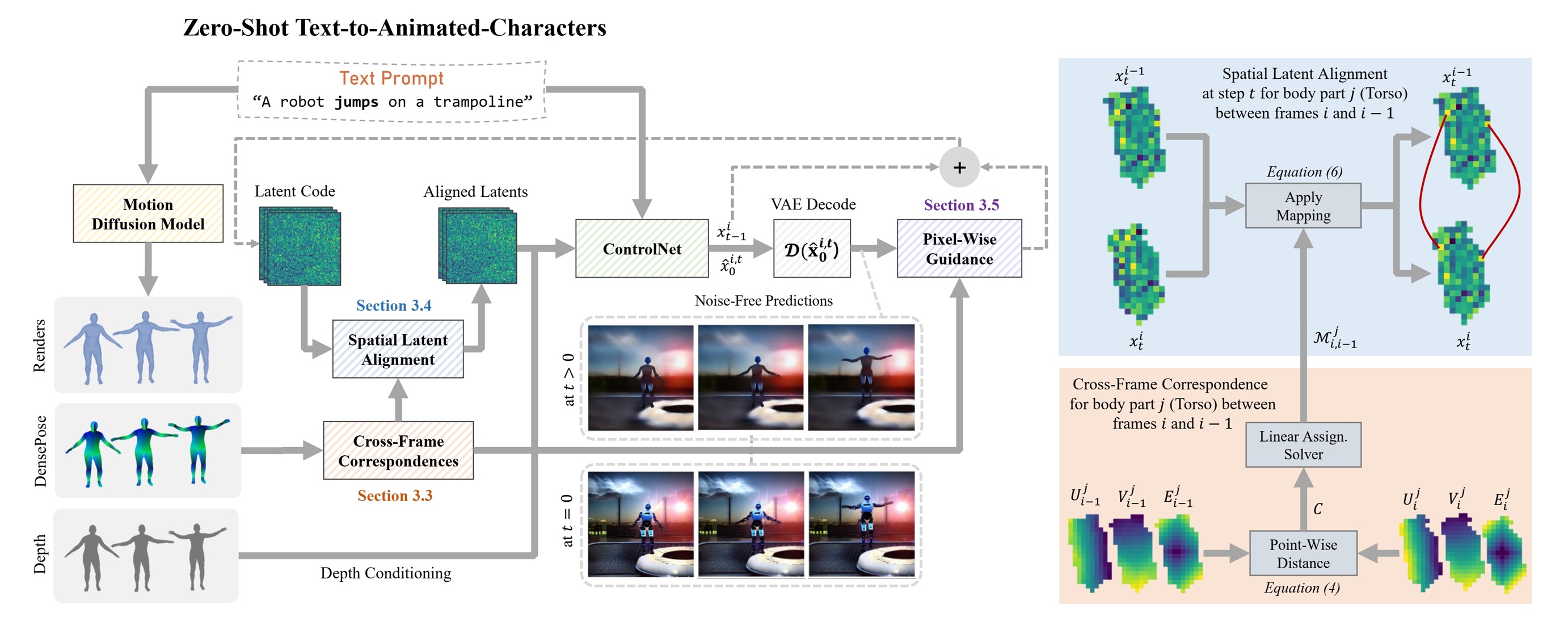

LATENTMAN: Generating Consistent Animated Characters using Image Diffusion Models

Teaching

Current Teaching - KAUST (2023-Present)

Deep Learning

KAUST, MSc level

Generative AI

KAUST, MSc level

Previous Teaching - Linköping University (2016-2021)

TSBB08 Digital Image Processing

Linköping University, BSc level

TSBB09 Image Sensors

Linköping University, BSc level

TSBB31 Medical Images

Linköping University, BSc level

TSBB06 Multidimensional Signal Analysis

Linköping University, MSc level

TBMI26 Neural Networks and Learning Systems

Linköping University, MSc level

TSBB17 Visual Object Recognition and Detection

Linköping University, MSc level

Student Supervision

I have supervised more than 20 postgraduate students on various thesis topics in computer vision, deep learning, and generative AI. A selection of supervised theses can be found on my university profile.

Awards and Honors

- Best Paper Award at VISAPP conference (2021)

- Affiliation with the Wallenberg AI, Autonomous Systems and Software Program (2017)

- Graduate scholarship from Nile University for Master's studies (2013)

- Honor award from Mansoura University for outstanding performance during BSc studies (2011)

- BSc graduation project ranked among the top 10 in Egypt by IEEE GOLD (2011)